Let's begin with a little bit of theory. The idea behind the filter is to allocate a bit vector of length m, initially all set to 0, and then choose k independent hash functions, h1, h2, ..., hk, each with range [1 m]. When an element a is added to the set then the bits at positions h(a)1, h(a)2, ..., h(a)k in the bit vector are set to 1. Given a query element q we can test whether it is in the set using the bits at positions h(q)1, h(q)2, ..., h(q)k in the vector. If any of these bits is 0 we report that q is not in the set otherwise we report that q is. The thing we have to care about is that in the first case there remains some probability that q is not in the set which could lead us to a false positive response.

The following class is a naive implementation of a Bloom Filter (pay attention: this implementation is not supposed to be suitable for production. It is made just to show how a Bloom Filter works and to study its behavior):

class Bloom:

""" Bloom Filter """

def __init__(self,m,k,hash_fun):

"""

m, size of the vector

k, number of hash fnctions to compute

hash_fun, hash function to use

"""

self.m = m

# initialize the vector

# (attention a real implementation

# should use an actual bit-array)

self.vector = [0]*m

self.k = k

self.hash_fun = hash_fun

self.data = {} # data structure to store the data

self.false_positive = 0

def insert(self,key,value):

""" insert the pair (key,value) in the database """

self.data[key] = value

for i in range(self.k):

self.vector[self.hash_fun(key+str(i)) % self.m] = 1

def contains(self,key):

""" check if key is cointained in the database

using the filter mechanism """

for i in range(self.k):

if self.vector[self.hash_fun(key+str(i)) % self.m] == 0:

return False # the key doesn't exist

return True # the key can be in the data set

def get(self,key):

""" return the value associated with key """

if self.contains(key):

try:

return self.data[key] # actual lookup

except KeyError:

self.false_positive += 1

The usage of this filter is pretty easy, we have to initialize the data structure with a hash function, a value for k and the size of the bit vector then we can start adding items as in this example:

import hashlib

def hash_f(x):

h = hashlib.sha256(x) # we'll use sha256 just for this example

return int(h.hexdigest(),base=16)

b = Bloom(100,10,hash_f)

b.insert('this is a key','this is a value')

print b.get('this is a key')

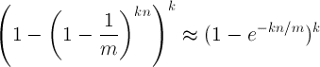

Now, the problem is to choose the parameters of the filter in order to minimize the number of false positive results. We have that after inserting n elements into a table of size m, the probability that a particular bit is still 0 is exactly

Hence, afer n insertions, the probability that a certain bit is 1 is

So, for fixed parameters m and n, the optimal value k that minimizes this probability is

With this in mind we can test our filter. The first thing we need is a function which tests the Bloom Filter for fixed values of m, n and k countinig the percentage of false positive:

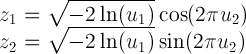

import random def rand_data(n, chars): """ generate random strings using the characters in chars """ return ''.join(random.choice(chars) for i in range(n)) def bloomTest(m,n,k): """ return the percentage of false positive """ bloom = Bloom(m,k,hash_f) # generating a random data rand_keys = [rand_data(10,'abcde') for i in range(n)] # pushing the items into the data structure for rk in rand_keys: bloom.insert(rk,'data') # adding other elements to the dataset rand_keys = rand_keys + [rand_data(10,'fghil') for i in range(n)] # performing a query for each element of the dataset for rk in rand_keys: bloom.get(rk) return float(bloom.false_positive)/n*100.0If we fix m = 10000 and n = 1000, according to the equations above, we have that the value of k which minimizes the false positive number is around 6.9314. We can confirm that experimentally with the following test:

# testing the filter

m = 10000

n = 1000

k = range(1,64)

perc = [bloomTest(m,n,kk) for kk in k] # k is varying

# plotting the result of the test

from pylab import plot,show,xlabel,ylabel

plot(k,perc,'--ob',alpha=.7)

ylabel('false positive %')

xlabel('k')

show()

The result of the test should be as follows

Looking at the graph we can confirm that for k around 7 we have the lowest false positive percentage.